Computer Science engineer (graduate of Polytech Lyon, a French engineering school) with a Master's degree in Artificial Intelligence. I like to help and be helped ! Contact me if you want : sacha{dot}lhopital{at}viseo{dot}com

An AI on the Edge : a Microsoft Azure Story

Custom Vision - A Service to Create Your Own Image Classifiers and Deploy Them

1. Upload images & Tag them

2. Train

3. Deploy…

For our example, choose the Dockerfile format (note : this format is really useful when working with other Microsoft services). You can now build and run the Docker image as usual :

My First Developers Meeting as a Speaker :

Since I started my latest project (Distributed AI in IoT Devices), I have to admit that I have learned a lot of new things in very different fields : Artificial Intelligence, Mathematical Modelling, Project Architecture, Craftsmanship, IoT, ... This project also gives me the opportunity to experiment a lot : new languages, new tools, new methods.

With that kind of experience, I soon got in touch with an association of developers : the Microsoft User Group of Lyon (MUG Lyon). They offered me a new challenge : to present my project, as feedback from my experience, in front of other developers. After some thought, I decided to take up this challenge and to present my project from a very specific angle : “Are Craftsmanship Good Practices Achievable in an AI and IoT Project ?”.

Why did I say yes ?

This was a great opportunity for me to reconcile the two things I love the most in my work : Artificial Intelligence and Craftsmanship best practices.

When I started my double degree in AI, a lot of people told me that engineering and science are two very separate fields that cannot be mixed. I believe they are wrong, since I did it in my current project. Indeed, I currently use all of my skills (from all of my previous experiences) to carry out this project. And I am very proud of that.

There is no reason to reject good practices just because a project involves complex mathematical calculations. Good practices also make the code more accessible to any developer : no need to be an expert in mathematics.

Last but not least, this was a great opportunity to improve my social and communication skills. I worked hard on this presentation to explain the project as simply as possible, and to deliver a talk that is accessible to anyone. Those kinds of skills are very useful to develop, and I am happy to have tested them in a real professional context.

Thanks to the MUG for this great opportunity !

The meetup event :

https://www.meetup.com/fr-FR/MUGLyon/events/250854003/

How To “Clean Code”

1. Start by defining what is most urgent

- Every element of the code should have a meaningful name (file, variable, function, class, …)

- Maximum indentation depth per method should be 2 or 3 levels

- Use Constants

- Don’t Repeat Yourself
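As a small illustration of these four rules, here is a minimal sketch in Python. The names (`MAX_HEART_RATE_BPM`, `is_alert`, `count_alerts`) and the threshold value are hypothetical, chosen only for this example.

```python
# Hypothetical threshold, named once instead of scattering a magic number.
MAX_HEART_RATE_BPM = 120


def is_alert(heart_rate_bpm):
    """Return True when a single measurement exceeds the threshold."""
    return heart_rate_bpm > MAX_HEART_RATE_BPM


def count_alerts(measurements_bpm):
    """Count alerting measurements, reusing is_alert instead of repeating the test."""
    return sum(1 for rate in measurements_bpm if is_alert(rate))
```

Each function has a meaningful name, stays at one or two levels of indentation, and the comparison against the threshold is written in exactly one place.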

2. SOLID is difficult, but the Single Responsibility Principle is the key

If there is one principle that is the most important and the easiest to apply, it surely is the Single Responsibility Principle : a class or a function has one and only one responsibility. Refactoring my code this way helps me quickly get more readable code. It is also very convenient for testing.
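To make the idea concrete, here is a minimal sketch where parsing, computing and formatting each live in their own function. The function names and the `;`-separated input format are assumptions made for the example, not code from the project.

```python
def parse_temperatures(raw_line):
    """Only parsing: turn '20.5;21.5' into a list of floats."""
    return [float(value) for value in raw_line.split(";")]


def average(values):
    """Only computing: arithmetic mean of a non-empty list."""
    return sum(values) / len(values)


def format_report(mean_value):
    """Only formatting: build the text shown to the user."""
    return f"Average temperature: {mean_value:.1f}"


def build_report(raw_line):
    """Orchestrate the three single-purpose steps above."""
    return format_report(average(parse_temperatures(raw_line)))
```

Because each function does one thing, each one can be tested on its own with a one-line assertion.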

3. Don’t lose your mind

4. Don’t forget tests

Et Voilà !

BDD - My Own Specification Sheet

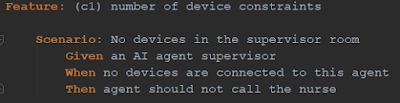

The Behavior-Driven Development test method allows me to test the really important features without making me test every single line of my code. This method helps me write minimal code that answers a specific need.

To use this method with my Python code, I use behave. Behave is a framework similar to Cucumber that allows me to merge my specifications and my tests. This is really powerful since I can now show my test results to anyone on my project : everybody can understand what is working, and what is not.

Here is a little illustration.

Behave also provides many other features for writing more specific tests, such as "work in progress" scenarios or step data when needed.

Finally, when I run those tests, the results are shown in a very understandable way :

Note that the path following the '#' indicates the location of the method associated with the step. It is really useful when refactoring the code, since failed tests can still be traced and fixed.

How I Made My First P.O.C

I will not explain here what a Proof Of Concept is or what it is for, but I will try to explain how I proceeded in my very specific case.

1. State of the art

- It is one of the fastest in terms of execution time, which is always a nice advantage.

- Also, the DPOP algorithm is a 100% decentralized method since all agents execute the same code : there is no “intelligent mediator” to manage them. This is really convenient since the system does not rely on a central process.

2. Mathematical aspect

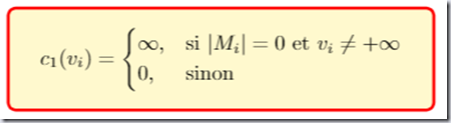

For Mi = {m1, …, ml} the set of devices linked to the agent i, if the agent has no devices, then there is no need to call the nurse.

The next function expresses the following constraint : if two agents are in the same geographical area (= they are neighbors), they can, where possible, synchronize themselves in order to avoid two interventions within a time span of t_synchro.

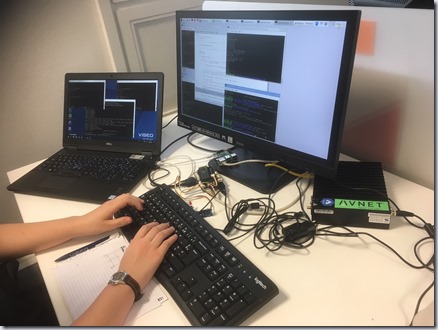
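The exact formulas are given in the figures. The sketch below only illustrates the general shape of the two constraints as cost functions, under stated assumptions : the function names, the cost values (`FORBIDDEN_COST`, `SYNCHRO_PENALTY`) and the reading that two simultaneous interventions count as a single one are all hypothetical.

```python
# Hypothetical cost values, chosen only for this illustration.
FORBIDDEN_COST = float("inf")
SYNCHRO_PENALTY = 1.0


def device_cost(devices, call_time):
    """Unary constraint: an agent with no devices must not schedule a nurse call.

    call_time is None when the agent schedules no intervention.
    """
    if not devices and call_time is not None:
        return FORBIDDEN_COST
    return 0.0


def synchro_cost(time_i, time_j, are_neighbors, t_synchro):
    """Binary constraint: penalise two distinct interventions of neighboring
    agents that fall within t_synchro of each other."""
    if not are_neighbors:
        return 0.0
    gap = abs(time_i - time_j)
    # Assumption: a gap of 0 means both agents share one intervention.
    if 0 < gap < t_synchro:
        return SYNCHRO_PENALTY
    return 0.0
```

With these definitions, two neighbors planning interventions at times 10 and 12 with `t_synchro = 5` pay the penalty, while aligning both on time 10 costs nothing.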

3. Hands on keyboard

3 Things That Inspired Me After #SIdO18

1. AI & IoT to upgrade our process

- Lower costs and predictive maintenance

- Improvement of process quality (by using cognitive services that aggregate unstructured and structured data). For instance, sound is becoming more and more important and there are lots of opportunities to explore.

2. AI & IoT : it’s a match !

3. AI & Medical is the new sexy !

- Telemedicine (Artificial Intelligence prescribing medications, medical web forums, …)

- Big Data use (which gives a better understanding of the patient and his environment)

- Augmented reality

- The augmented patient (with an artificial heart for instance)

Conclusion

Improve the Configuration of Docker Compose with Environment Variables

I recently started working on a new python project (yeah!). This project is really interesting, but the first lines of code are at least a d...

- Remember when I started my last internship four months ago ? Well, now is the time to deploy it using Microsoft Azure IoT Edge ! This...

- A long time ago, I read Clean Code during my first internship. Immediately after, I started to apply the global idea hidden in this bib...